
Introduction:

On March 8, 2023, Human Rights Now (HRN) hosted a Zoom webinar as part of the NGO CSW Forum, held from March 5 to 17, 2023, in parallel with the 67th session of the United Nations Commission on the Status of Women (CSW). The webinar focused on the non-consensual filming and distribution of pornographic videos and featured five expert panelists who offered insights and recommendations on how to address this issue, including legal measures and best practices from around the world to combat the distribution of non-consensual pornographic videos.

A summary of each panelist’s presentation can be found below.

Panelists:

- Lorna Woods: Professor of Internet Law at the University of Essex

- Sophie Mortimer: Manager of Revenge Porn Helpline and StopNCII.org

- Michelle Gonzalez: Executive Director of Cyber Civil Rights Initiative (CCRI)

- Kazuko Ito: Vice-President of Human Rights Now, attorney-at-law

- Cindy Gallop: Founder and CEO of MakeLoveNotPorn

Moderator:

- Hiroko Goto: Vice-President of Human Rights Now, Professor at Chiba University Law School

Today, many people around the world suffer from non-consensual filming and distribution of pornographic videos. Once the video becomes available online, it continues to spread indefinitely without the victim’s permission.

This event aimed to shed light on the situation faced by victims of non-consensual pornography, including victims forced to appear in pornographic videos and former porn actresses in Japan whose videos remain available against their will on overseas pornographic websites such as Pornhub. Given the borderless nature of online space, the event also aimed to explore international efforts to tackle the issue.

The event featured experts from the U.S., the U.K., and Japan, who introduced laws and best practices for removing non-consensual pornographic videos and ending their distribution.

Outline:

- Opening Remarks by Hiroko Goto of HRN

- Presentation by Lorna Woods of Essex Law School and Carnegie UK Trust

- Presentation by Sophie Mortimer of Revenge Porn Helpline

- Presentation by Michelle Gonzalez of Cyber Civil Rights Initiative (CCRI)

- Presentation by Kazuko Ito of HRN

- Presentation by Cindy Gallop of MakeLoveNotPorn

Opening Remarks by Hiroko Goto of Human Rights Now (HRN)

Hiroko Goto of Japan is a professor of law at Chiba University School of Law and vice president of Human Rights Now (HRN).

Presentation by Lorna Woods of Essex Law School and Carnegie UK Trust

Lorna Woods of the United Kingdom is a professor of Internet Law at the University of Essex Law School and has extensive experience in the field of media policy and communication regulation, including data protection, social media, and the internet. Lorna’s presentation focused on the connection between internet regulation and the sharing of non-consensual intimate images.

Lorna explained that predators can use social media to find information with which to groom and exploit people. This may, for instance, take the form of false advertisements for 'modeling jobs' designed to pull young women into the porn industry. Social media also makes sharing private material very easy: a boyfriend can share his girlfriend's intimate images without her consent, or post them as revenge porn after a breakup. As a professor of Internet Law, Lorna sees considerable overlap between the regulation of the internet and the non-consensual sharing of intimate images.

Development of a ‘Code of Practice’

Lorna has been working on a project to develop a code of practice for the UK's proposed legal regime, the 'Online Safety Bill'. Lorna and her organization describe their approach to the code as a 'systems-based approach to regulation'. Lorna explained that taking down or removing non-consensual content is not the only issue in regulating image-based abuse. Social media often creates the environment in which these images are shared, so platforms both contribute to the problem of non-consensual intimate images (NCIIs) and are where the problem is found. The code therefore comprises a four-stage model that addresses every level of social media engagement.

First, the model looks at content creation: what does it take to create an account, and what filters and controls apply to uploaded content? Second, it addresses discovery and navigation. Social media platforms' algorithms often lean towards recommending extreme content to maximize user engagement and keep viewers interested, and, as Lorna pointed out, (non-consensual) pornographic images fit that description perfectly. In addition, social media's advertising-driven business model encourages platforms to prioritize such extreme content because it elicits stronger user responses. Social media thus becomes an environment in which non-consensual porn or intimate images can be shared and found by those seeking them. Third, Lorna and her team looked at user response: to what extent can users complain about content, and how easy is it to flag content as problematic? Finally, the model assesses content moderation. Are complaints mechanisms user-friendly? How useful are dropdown menus? Do forms require users to fill in their full names and addresses? The last question can be crucial in the takedown of NCIIs, as disclosing personal information may be too great a risk for victims.

Safety by Design

Lorna called for a 'safety by design' approach to social media platforms: when platforms design their services, they are responsible for implementing proper risk assessment and content moderation. For instance, platforms could require users who want to share pornographic images to be at least identifiable to the platform, so that if the images turn out to be a problem, there is a way to trace the perpetrator. Furthermore, terms of service could ban specific uses of the platform, such as deceptive advertisements for the porn industry. Lorna also explained that platforms need to respond better to user complaints and to enforce their terms of service equally. To this end, she recommended that social media companies employ an appropriately qualified in-house professional who can spot problems and respond to victims of crimes such as NCII sharing.

Presentation by Sophie Mortimer of Revenge Porn Helpline

Sophie Mortimer of the United Kingdom is a manager of the Revenge Porn Helpline and StopNCII.org and an expert on intimate image abuse. Sophie’s presentation focused on legal strategies for addressing the sharing of intimate images without consent.

The UK-based 'Revenge Porn Helpline' was established in 2015, when sharing intimate images without consent became illegal in the UK. The organization provides support in four main areas: non-consensual sharing of intimate images, threats to share such images, voyeurism, and sextortion (webcam blackmail). Through her work at the helpline, Sophie has observed that victims of non-consensual intimate image (NCII) sharing are predominantly female, while perpetrators are predominantly male. She also noted that NCII sharing does not happen in a vacuum but is tied to other forms of gender-based violence, such as domestic abuse, stalking, doxxing, trolling, collector culture, and more. The sharing of non-consensual intimate images, or revenge porn, is therefore part of the broader, systemic violence against women and girls.

The Legal Situation in the UK

Sophie then addressed the flaws in UK law on revenge porn and the non-consensual sharing of intimate images. The current law requires proof of an 'intent to cause distress' to the victim before a criminal charge can be brought, which is difficult to establish and deters victims from coming forward. Sophie also discussed how the current legislation has defenses written into it that perpetrators can use to avoid a charge. For instance, a perpetrator's claim that they thought the victim would not mind the image being shared, or that they shared it for money, is a valid defense under the current law. Moreover, the lack of anonymity in reporting NCII cases discourages many victims from coming forward.

Sophie also pointed out that the definition of a 'private sexual image' in UK law is very narrow. It currently excludes images of people in underwear as well as altered images. The latter exclusion is especially troublesome, as the use of deepfake technology and nudification apps has skyrocketed over the last couple of years. Because of this narrow definition, countless victims are currently unable to report their NCII cases.

Sophie went on to explain that since the law was introduced in 2015, only 12% of NCII incidents reported to the police have resulted in a charge, and only 6.5% of those charges have resulted in a prosecution. Even more concerning, only 0.8% of incident reports have resulted in a prosecution. Sophie emphasized that these statistics can be deeply discouraging to victims and may even deter them from reporting their own cases.
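
The 0.8% figure follows from chaining the two stage-by-stage rates, assuming (as the figures imply) that a prosecution can only follow a charge:

$$
\frac{\text{prosecutions}}{\text{reports}} = \frac{\text{charges}}{\text{reports}} \times \frac{\text{prosecutions}}{\text{charges}} = 0.12 \times 0.065 = 0.0078 \approx 0.8\%
$$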

Recognizing the complexity of the issue, UK lawmakers asked the Law Commission to review the current legislation. The Commission produced a thorough report recommending a complete overhaul of the law, which the government plans to implement as part of the upcoming Online Safety Bill. The four main areas of change correspond to the issues Sophie previously discussed:

- The “intent to cause distress” clause

- Anonymity

- Inclusion of deepfakes or altered images

- The definition of an intimate image

The Launch of StopNCII.org

To date, Sophie's organization has helped victims remove over 300,000 non-consensual intimate images. Since removing the content is usually an important first step for victims, Sophie and her colleagues have been working closely with social media platforms such as Meta to implement removal mechanisms. In 2021, Meta and the Revenge Porn Helpline created StopNCII.org, a free, easy-to-use online tool that helps social media users and adult NCII victims around the world stop the spread of NCIIs. The tool generates a hash (a digital fingerprint) of the intimate content directly on the victim's device. StopNCII.org then shares this bank of hashes with participating social media companies so that they can detect and remove matching images. A similar tool for users under 18, called 'Take It Down', is also available.
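
The hash-matching workflow described above can be sketched in Python. This is a minimal illustration under stated assumptions, not StopNCII.org's actual implementation: it substitutes a SHA-256 cryptographic hash from Python's standard library for the perceptual hashes that image-matching systems generally use, and `fingerprint`, `HashBank`, and `should_block_upload` are hypothetical names. What it shows is the core privacy property: only the fingerprint leaves the victim's device, and platforms compare new uploads against the shared bank of fingerprints.

```python
import hashlib

# Assumption: SHA-256 stands in for a perceptual hash; all names are hypothetical.


def fingerprint(data: bytes) -> str:
    """Hash the raw file bytes locally, on the victim's own device."""
    return hashlib.sha256(data).hexdigest()


class HashBank:
    """Hypothetical shared store holding fingerprints only, never images."""

    def __init__(self) -> None:
        self._hashes = set()  # set of hex-digest strings

    def submit(self, digest: str) -> None:
        # The victim's device sends only the hex digest, not the image itself.
        self._hashes.add(digest)

    def contains(self, digest: str) -> bool:
        return digest in self._hashes


def should_block_upload(upload: bytes, bank: HashBank) -> bool:
    """Platform-side check: hash each new upload and look it up in the bank."""
    return bank.contains(fingerprint(upload))


if __name__ == "__main__":
    bank = HashBank()
    private_image = b"placeholder bytes standing in for an image file"
    bank.submit(fingerprint(private_image))
    print(should_block_upload(private_image, bank))         # True: exact copy
    print(should_block_upload(private_image + b"!", bank))  # False: one changed byte breaks an exact hash
```

Because an exact hash changes completely when even one byte of the file changes, a real matching system needs fingerprints that tolerate resizing and re-encoding; that is the trade-off this SHA-256 stand-in deliberately ignores.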

Presentation by Michelle Gonzalez of Cyber Civil Rights Initiative (CCRI)

Michelle Gonzalez of the United States is the director of Cyber Civil Rights Initiative (CCRI) and has extensive experience in assisting survivors of sexual violence and vulnerable populations. Michelle’s presentation focused on challenges and best practices in deterring image-based sexual abuse (IBSA) and offering direct services to individuals who experience IBSA.

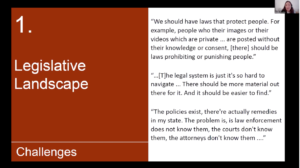

Legislative Landscape

Although not a lawyer herself, Michelle outlined the legislative landscape of the United States with regard to digital sexual abuse. She noted that the US currently has no federal criminal law against non-consensual explicit image (NCEI) distribution or sextortion, and that the patchwork of state laws in its place is confusing for both survivors and law enforcement. Further, Section 230 of the Communications Decency Act creates high hurdles to holding website operators that host IBSA accountable.

Michelle added that recent CCRI research with people who have experienced IBSA found that the absence of a federal law in the US does cause them harm.

Michelle explained that after CCRI President and Legislative and Tech Policy Director Dr. Mary Anne Franks drafted a Guide for Legislators and model state and federal legislation in 2013, multiple states used the model legislation as a template, and the number of US states prohibiting IBSA rose from 3 to 48. A federal bill is also pending.

Community Support/Direct Services

Michelle noted that attorneys, local law enforcement, and direct service organizations have faced backlogs in recent years, and that CCRI's own caseload has surged: it served 5,600 callers in 2022, an increase of 87.1% over 2019. Professional sex workers may face extreme barriers to accessing support due to social stigma, fear of law enforcement, and theft of their work product.

CCRI is building tools that give individuals immediate and comprehensive information, so that they have prompt access to resources even while they are waiting on or filing multiple reports. For example, the CCRI Safety Center offers step-by-step guidance for individuals aged 18 and older who experience IBSA, and the CCRI Bulletin informs individuals about sextortion scams.

Tech Company Recommendations

For adult entertainment sites:

- Follow protocol for minors where age is indeterminate, meaning that it is difficult to tell by visual inspection whether the individual is over 18 years old

- Disable screenshots and downloads

- Request confirmation of age and consent of individual uploader, all actors, and viewers

- Retain intimacy coordinators in professional production

- Arrive at/enforce global standards

For all tech platforms:

- Design prominent and easy-to-use in-app reporting instead of relying on text-heavy reporting forms

- Respond to reports, including what action was taken in the app (for example, Facebook and Instagram)

- Allow for anonymous and Trusted Flagger reporting

- Consider protections for end-to-end encrypted products

- Block previously identified non-consensual pornography (NCP) from being re-uploaded

- Search for/disable repeat catfishing accounts

- Include prominent warnings to deter searches for terms such as "revenge porn", similar to existing child safety tools

- Establish humane working conditions for human content moderators and expand personnel significantly

- Conduct external audits and publish annual transparency reports

Health and Well-Being for Individuals

Michelle explained that individuals who have experienced IBSA avoid distressing feelings through distraction, substance abuse, and social withdrawal, while those who do seek support disclose their experiences to family and friends, participate in virtual communities, and seek professional help.

To promote the health of individuals, Michelle recommended that health care intake questionnaires ask whether the assault was recorded, university psychology departments develop curricula and new treatment models to address IBSA, and therapists refer individuals to CCRI’s website.

Presentation by Kazuko Ito of Human Rights Now (HRN)

Kazuko Ito of Japan is the founder and vice president of Human Rights Now (HRN), the first international human rights organization in Japan, and is an accomplished human rights lawyer. Kazuko's presentation focused on progress and challenges regarding digital sexual violence against women in Japan.

Violence Against Women in Japan

Kazuko noted that online and offline violence against women in Japan are deeply connected and reinforce each other, and that a rape culture prevails in Japan.

Forced Recruitment of Women in the Adult Porn Industry in Japan

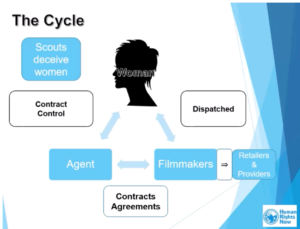

Kazuko stated that tackling the forced recruitment of women into the adult porn industry in Japan is a key issue for HRN, citing HRN's 2016 investigative report, which revealed that many young victims had been deceived into believing they were signing contracts to become models rather than performers in pornographic videos. Once victims sign the contract, porn agencies demand that they perform in pornographic videos, which is a serious form of violence against women and a human rights violation. If a victim refuses, porn agencies threaten victims with high penalty fees or intimidate them with threats to expose their actions or images to friends and family. Kazuko asserted that a victim of forced recruitment into the adult porn industry in Japan ultimately has no power to decide whether to accept or refuse any performance.

The structure of entrapment of victims:

Even victims who manage to terminate their contracts with porn agencies may be unable to stop the distribution of the pornographic videos they were forced to appear in, and the resulting psychological distress may drive some to suicide.

Despite the forced nature of the recruitment and filming, Kazuko stressed that agencies are rarely prosecuted, since the contract purports to show that the victim gave their "consent" to "perform" in the pornographic video.

After the publication of HRN’s 2016 investigative report, many survivors courageously spoke up, which led to changes in society and in policy, such as the establishment of the Inter-Ministerial Committee in 2017, which focused on prevention and awareness-raising among young people.

New Legislation in 2022

Kazuko explained that, in response to the growing victimization of young women during the COVID-19 pandemic, Japan's Parliament finally adopted comprehensive legislation in 2022 to prevent non-consensual pornography and protect its victims. A very important aspect of the Act is its procedural safeguards against non-consensual pornography. For example, Article 3 establishes that a filmmaker shall not force performers into sexual acts, and Articles 4-6 require filmmakers to provide performers with written performance contracts accompanied by additional explanatory documents. Article 7 further requires a one-month period between the signing of the contract and the filming of the pornographic video, and Articles 8-9 oblige filmmakers to give the performer an opportunity to check the completed product and to wait at least four months before publishing the video.

Kazuko explained that the new Act has the potential to prevent and end digital violence. In particular, she stressed Article 13, which allows a performer to unilaterally cancel a contract without giving any reason, at any time before publication and for up to two years after publication, and prohibits the filmmaker from imposing any penalty fee for the cancellation. Articles 14-15 require publication to be stopped and prevented, either as a result of a canceled contract or at the performer's demand, and Article 16 exempts service providers from liability when they take measures to prevent transmission at the victim's request. Articles 20-22 provide criminal sanctions for violations of certain provisions of the Act.

Kazuko noted that while the new Act represents significant progress, some challenges remain. For example, the porn industry's compliance with the legislation is a problem, and there is no punishment for those who publish pornographic videos against the performer's will. Transnational cases, in which foreign platforms publish Japanese-produced pornographic videos that victims were forced to appear in, are especially difficult to resolve. Lastly, victims in Japan who want their images and videos removed from the internet must go to various government bureaus, and the response is often ineffective, since the bureaus have no legal authority and can only advise victims to ask operators to stop publication.

HRN’s Recommendations

- Strengthen the government's duties by establishing a supervising authority to enforce the new Act and a one-stop victim support center for removing images and videos, similar to the one-stop victim support center in South Korea

- Impose binding duties on relevant industries, such as platforms, service providers, and Big Tech companies, going beyond the non-binding UN Guiding Principles on Business and Human Rights

- Tackle transnational cases of forced appearances in adult pornographic videos

- Further develop the Act to recognize the right to be forgotten, expand sanctions, and determine whether certain activity should be regulated or prohibited

- Continue working on the issue of digital sexual abuse to protect victims online

Presentation by Cindy Gallop of MakeLoveNotPorn

Cindy Gallop of the United Kingdom is the founder and CEO of MakeLoveNotPorn and speaks at conferences worldwide to promote positive sexual values and positive sexual behavior. Cindy's presentation focused on why she started MakeLoveNotPorn, what it is, and three things MakeLoveNotPorn does to bring different perspectives to the issue of digital sexual abuse.

The Start of MakeLoveNotPorn

Cindy explained that she started MakeLoveNotPorn 14 years ago after seeing firsthand, while dating younger men, how porn becomes sex education by default when sex is not discussed in the real world. In its original iteration, MakeLoveNotPorn was built on the contrast between the porn world and the real world. After presenting MakeLoveNotPorn in a viral TED Talk in 2009, Cindy realized that she had uncovered a huge global social issue, and she turned MakeLoveNotPorn into a business designed to do good and make money at the same time.

What MakeLoveNotPorn Is

Cindy explained that MakeLoveNotPorn is pro-sex, pro-porn, and pro-knowing-the-difference, and that it is the first user-generated, 100 percent human-curated, social sex video-sharing platform. Its intent is to socialize and normalize sex and to promote consent, communication, and good sexual values and behavior, serving as a form of sex education through real-world demonstration. MakeLoveNotPorn is built on a revenue-sharing business model: members pay to subscribe to, rent, and stream social sex videos, and half of MakeLoveNotPorn's income goes to its contributors, known as MakeLoveNotPorn stars.

Things MakeLoveNotPorn Does to Bring Different Perspectives to the Issue

- Cindy argued that because the male founders of giant porn industry platforms are not the primary targets of online and offline harassment, abuse, sexual assault, violence, rape, and revenge porn, they did not and do not proactively design their platforms to prevent these harms. At MakeLoveNotPorn, by contrast, the marginalized groups most at risk of digital sexual abuse, including women, Black people, People of Color, LGBTQ+ people, and disabled people, design safe spaces and safe experiences into the platform themselves. Cindy explained that MakeLoveNotPorn is designed through a female lens to be the safest place on the internet, with no self-publishing of anything. Curators review every video and comment to make sure the content is consensual and legal, which includes obtaining full identifying details for every participant and the filmmaker, and even just a "bad feeling" is reason enough not to publish a video. Additionally, if a MakeLoveNotPorn star wants their videos removed, this is done instantly, without any application form or lengthy process.

- Cindy shared that she designed MakeLoveNotPorn around her own beliefs and philosophies, including her own sexual values. She asserted that while parents often instill good values in their children, they forget to extend those values to sex, even though empathy, sensitivity, generosity, kindness, honesty, and respect are just as important during sex as in every other sphere of life. MakeLoveNotPorn works to end rape culture by promoting these positive sexual values and showing how wonderful consensual, communicative sex is in the real world. Cindy noted that men express their appreciation for MakeLoveNotPorn more than anyone else, because it is a safe space on the internet where men can be, and can watch other men be, open, emotional, and vulnerable about sex, offering a counter to toxic masculinity.

- Cindy said that she has seen enormous human unhappiness and misery caused by the shame, embarrassment, and guilt with which we imbue sex. By destigmatizing real-world sex on MakeLoveNotPorn, Cindy hopes that one day nobody will ever have to feel embarrassed or ashamed about having a naked photograph or a sex video posted on the internet, since sex is a natural part of human experience. Cindy shared that MakeLoveNotPorn stars have said that sharing themselves on the internet in a completely safe space has helped them process and heal from sexual trauma and abuse.